Horizon Workrooms is an application that is part of the Work Metaverse experiences. It is designed to let users work in immersive spaces and mirror their computers in AR/VR to enhance their productivity.

Role

Senior Product Designer (IC)

Challenge

To bring the vision for work in the Metaverse to the present by developing new interface paradigms that enable knowledge workers to leverage AR/VR for new productivity use cases

Contributions

Researched and developed new input methods for VR to improve comfort, aid adoption, and increase satisfaction for users across all VR/AR use cases

Worked to abstract complexity inherent in spatial computing to create new features and ways of working in VR that are easy to use and desirable for knowledge work users

Contributed to large application redesign projects that introduced new, more flexible ways to work in augmented reality

Worked with researchers and PMs to identify gaps and opportunities and communicated them to other teams at Meta

Impact

Developed concepts from early-stage ideas to concrete hardware/software specs, directly influencing Meta's hardware roadmap

Disclaimer: The images and content only exemplify the nature of my work. To comply with NDA requirements, I composed new images to illustrate the concepts without disclosing any specific project information.

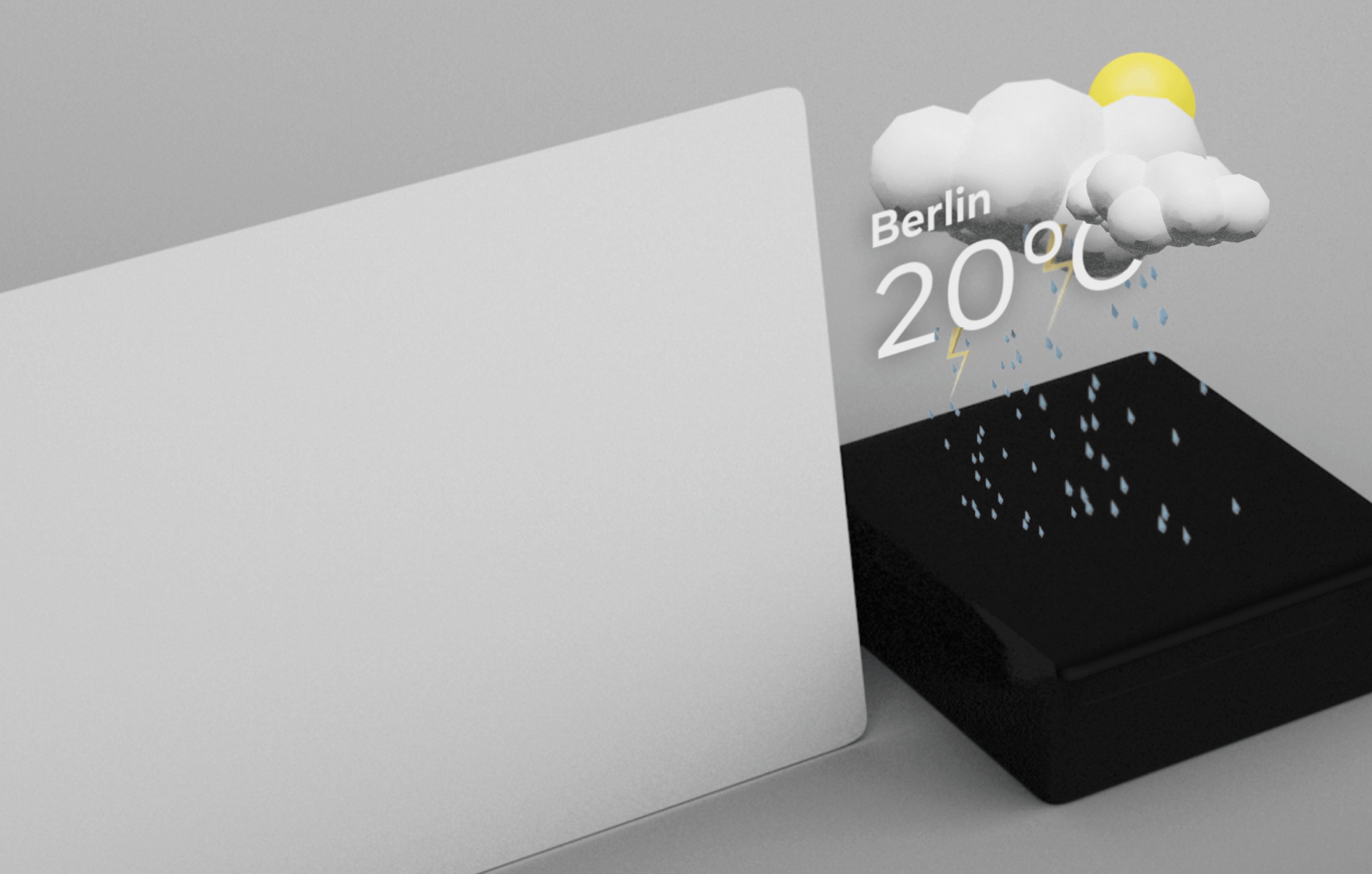

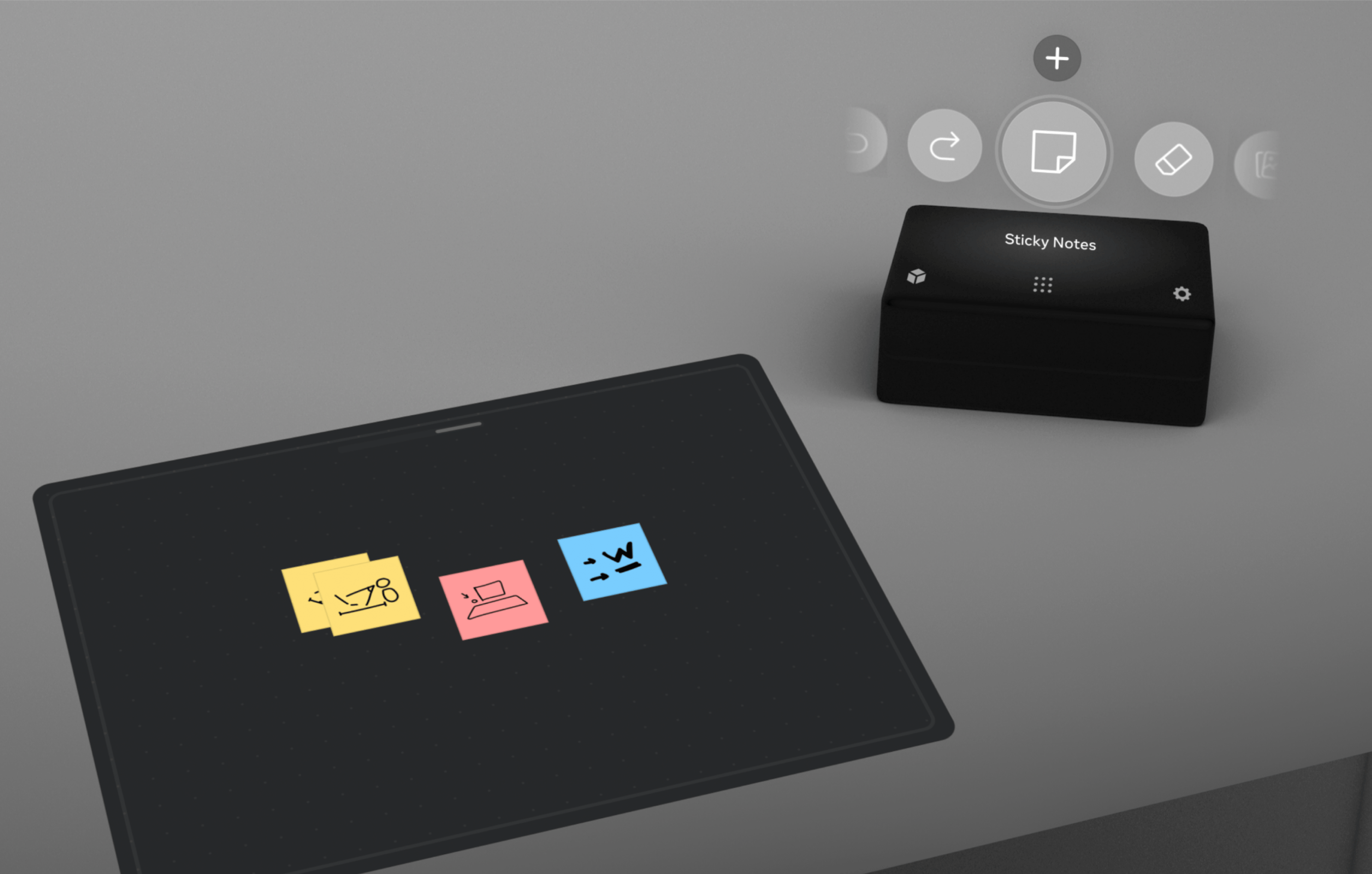

While working with a special team at Reality Labs dedicated to developing new AR-based concepts and products, I explored new hardware concepts to improve usability and flexibility in VR.

I worked to envision alternative designs developed specifically for the paradigm of spatial computing. After gathering many user insights about VR use, I concluded that today's controllers leave many UX challenges unsolved.

A critical condition for wider VR adoption is allowing users to carry their experience with phones and tablets over to VR quickly, without a steep learning curve. Therefore, I chose to focus on concepts that leverage existing mental models while creating new opportunities for the Quest Ecosystem. More specifically, I concentrated on usability-focused metrics such as ease of use and precision, user satisfaction, playfulness, and user flow.

Instead of measuring the success of new input methods in existing applications (e.g., games, immersive worlds), I focused on unlocking new experiences and applications that are extremely hard to use today.

While exploring solutions, I paid close attention to the growing AR/VR divide. Today, when users transition from VR to AR, virtual screens that work well in VR often don't sit well when overlaid onto their physical environment. Similarly, you can be immersed in VR and accidentally bump into a hot cup of coffee you forgot on your table. Users also often mentioned that the immersion afforded by VR kept them from using VR altogether, because it cut them off from their world of notifications and from quick access to the applications they need. Problems such as these prevent users from genuinely taking advantage of this new computing paradigm.

To solve these problems, I designed solutions and methods that allow users to quickly move from VR to AR while maintaining a 1:1 spatial mapping between virtual and personal physical spaces. These solutions included active objects that anchor interactions in space. I focused on both spatial awareness and tactile, playful interactions that let users quickly access content from other applications in the Quest Ecosystem, giving them complete control of their experience.

If AR is to be adopted, it must be tactile and easy to grasp. We love our phones and computers because they are part of us; we can feel and move them. Similarly, we need easy, intuitive, and meaningful ways to control AR augmentations. Because AR augmentations can exist on any surface and quickly lead to cognitive overload, users need to remain in control and not feel overwhelmed. This vision of AR is inherently different from what AR glasses propose. AR glasses are heads-up displays (HUDs) and don't often focus on ambient or spatially oriented interfaces.

The results of this work influenced Meta's hardware roadmap and were well received by other hardware OS teams in the organization.